SUNRISE CRITICAL INFRASTRUCTURE SERIES

It is widely known that we are living through a historic moment in the field of artificial intelligence (AI), capturing the attention of the entire world with spectacular results across multiple application fields, especially in text and image generation. Visual Large Language Models (V-LLM), such as GPT-4 [1] (chatGPT) from OpenAI and Gemini [2] from Google, have impressed the technology community with their ability to understand both text and images and to answer questions related to both.

At SUNRISE, our project is focused on enhancing preparedness and resilience in the face of events like the coronavirus pandemic. By integrating advanced technology into our operational framework, specifically using unmanned aerial vehicles (UAVs) for critical infrastructure inspections, we aim to reduce the need for onsite personnel, thereby minimizing health risks during such crises. This strategic approach not only keeps our team safer but also ensures that infrastructure remains operational and inspected without disruption. To achieve this, we have implemented in our Visual Question Answering (VQA) module open-source Visual Large Language Models like BLIP-2 [3], SEAL VQA [4], or LLaVA1.6 [5]. These solutions have great potential because they allow us to respond to various tasks without the need for specific training for each one, thus creating a zero-shot UAV remote infrastructure inspection tool.

To better understand, let’s momentarily step into the shoes of an infrastructure operator. For instance, consider the individual responsible for inspecting a remote hydropower facility hidden in the Dolomites, like the one shown in Figure 1. As a maintenance manager, you must ensure that the plants are always operating to their full potential to maximize energy production. So, one of the things you have to check is that the intake grates of the weirs are not obstructed, which would prevent them from diverting the water to the hydropower plant.

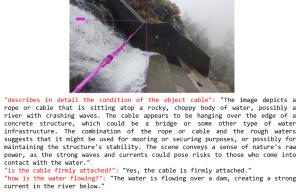

In this scenario, there are two options: the traditional one, which involves dispatching a team by helicopter to the area to carry out a visual inspection; or the innovative one, which involves launching a routine with a predefined inspection path via UAV, where you simply define the questions whose answers you seek in natural language (no-code), and the UAV is capable of autonomously performing the task. The question asked by the operator of the model is highlighted in red , and the responses from the VQA module are in black in the attached Figure 2 below.

As can be inferred from the text generated by the VLM model, maintenance work is necessary in this instance. Consequently, the maintenance team will need to be dispatched via helicopter. Had the grate not been blocked, inspection costs would have been significantly reduced, and the risk of workplace accidents minimized. However, since action must be taken and workers dispatched, you, as the final authority, want to ensure beforehand that the auxiliary safety infrastructure is in good condition to avoid endangering employees.

To achieve this, simply input the object you wish to analyze into natural language. In this case, it is the safety cable that allows workers to anchor themselves and prevent falls due to the water drop. Accompanied by a series of questions, the answers to which will aid in decision-making. As depicted in the previous figure, questions posed by the maintenance manager are highlighted in red, while responses from the virtual assistant appear in black in Figure 3.

By employing the UAV inspection module and leveraging the latest AI models, operators can be deployed seamlessly, eliminating uncertainties regarding the tasks they will face and the necessary equipment. This innovative approach eliminates the need to collect images to form datasets and retrain models for each specific task. It exemplifies how academic advancements can be directly applied to real-world scenarios.

This example, a real use case of Eviden’s tool at the facilities of its partner Hydro Dolomiti Energia (HDE), is just one of countless potential applications of this technology in the field of visual inspection and image analysis. The level of language comprehension and visual perception demonstrated by current V-LLM models suggests a bright future for these types of applications, where the limit is more in the imagination of the person using the tool and defining the questions than in the technology itself.

Written by: Mario Triviño, Eviden

References

- OpenAI (2023). GPT-4 Technical Report. Available at: https://arxiv.org/pdf/2303.08774.pdf, retrieved 2024-04-15.

- Rohan Anil, Sebastian Borgeaud, Jean-Baptiste Alayrac, et al., 2024. Gemini: A Family of Highly Capable Multimodal Models. Google. Available at: https://arxiv.org/abs/2312.11805, retrieved 2024-04-16.

- Li, J., Li, D., Savarese, S. & Hoi, S., 2023. BLIP-2: Bootstrapping Language-Image Pre-training with Frozen Image Encoders and Large Language Models. Salesforce Research. Available at: https://arxiv.org/pdf/2301.12597.pdf, retrieved 2024-04-15.

- Wu, P. & Xie, S., 2023. V*: Guided Visual Search as a Core Mechanism in Multimodal LLMs. arXiv:2312.14135 [cs.CV], [online] Available at: https://arxiv.org/abs/2312.14135, retrieved 2024-04-02.

- LLaVA Team. (2024, January 30). LLaVA: The Next Chapter. Retrieved from https://llava-vl.github.io/blog/2024-01-30-llava-next/, retrieved 2024-04-16.

- Liu, S., Zeng, Z., Ren, T., Li, F., Zhang, H., Yang, J., Li, C., Yang, J., Su, H., Zhu, J. & Zhang, L., 2023. Grounding DINO: Marrying DINO with Grounded Pre-Training for Open-Set Object Detection. arXiv:2303.05499v4 [cs.CV], [online] Available at: https://arxiv.org/pdf/2303.05499.pdf, retrieved 2024-04-16.

- Ke, L., Ye, M., Danelljan, M., Liu, Y., Tang, C-K., Yu, F., Tai, Y-W., 2023. Segment Anything in High Quality. ETH Zürich & HKUST. Available at: https://arxiv.org/pdf/2306.01567.pdf, retrieved 2024-04-16.